Foundations of Web Development

Scott Frees, Ph.D.

Program Director, M.S. Computer Science

Program Director, M.S. Data Science

Program Director, M.S. Applied Mathematics

Convenor, B.S. Computer Science

Convenor, B.S. Cybersecurity

Ramapo College of New Jersey

505 Ramapo Valley Road

Mahwah, NJ 07430

[email protected]

©2025

A foundational guide to modern web development—from protocols to front-end interactivity, grounded in real-world architecture and time-tested pedagogy.

This book isn’t just about HTML, CSS, and JavaScript—though you’ll encounter plenty of all three. It’s a comprehensive guide to the concepts of web development, and how those concepts span across frameworks, languages, and layers of modern full stack applications.

Written for college students, instructors, and professional developers alike, it takes a pedagogically sound, hands-on approach to learning how the web actually works—starting from the ground up. You’ll begin with the fundamentals: internet protocols, TCP/IP, sockets, and HTTP. From there, you’ll build up a working knowledge of web standards like HTML and CSS, and then dive into backend programming using JavaScript in the Node.js runtime—not because it's the only option, but because it minimizes language overhead and maximizes focus on the architecture and ideas that matter.

You won’t learn just one way to build a web app. You’ll build your own framework before adopting industry-standard tools like Express, gaining insight into routing, middleware, templating, databases, and state management. You’ll incrementally evolve a single example—a number guessing game—through nine iterations, each showcasing a deeper or more advanced feature, from form handling to RESTful APIs to reactive front ends built with Vue.js.

You’ll cover:

-

Networks & Protocols – Learn what really happens when you click a link, from TCP handshakes to HTTP requests.

-

Markup & Hypertext – Go beyond tags and learn how HTML works as the structural backbone of the web.

-

JavaScript (Server & Client) – Explore the language in a way that emphasizes conceptual understanding over syntax memorization.

-

Asynchronous Programming – Master callbacks, promises, and async/await as you build responsive, concurrent systems.

-

Databases & State – Learn how modern web apps manage persistent state with relational databases and sessions.

-

Templating & Frameworks – Understand how server-side rendering works from first principles, then leverage Pug and Express.

-

Styling & Layout – Dive deep into CSS, including Flexbox, Grid, and responsive design, before layering in frameworks like Bootstrap.

-

Client-side Development – Manipulate the DOM, handle events, make AJAX requests, and build interactive SPAs with Vue.js.

-

Security, Deployment & Infrastructure – Round out your knowledge with practical insight into authentication, encryption, and modern DevOps topics.

Whether you’re a computer science student getting your first taste of real-world development, an instructor looking for a curriculum-aligned text, or a working developer aiming to fill conceptual gaps, this book will challenge and reward you. It doesn’t shy away from the complexity of the modern web—but it does guide you through it with clarity, consistency, and context.

If you're tired of chasing trends and frameworks without understanding the foundations, this book is your starting point—and your roadmap—for becoming a thoughtful, well-rounded web developer.

Some more of my work...

Professor of Computer Science, Chair of Computer Science and Cybersecurity, Director of MS in Computer Science, Data Science, Applied Mathematics.

My consultancy - Turning ideas into working solutions and real product: building MVPs, modernizing legacy systems and integrating AI and automation into your workflows.

My work on Node.js and C++ Integration, a blog and e-book. Native addons, asynchronous processing, high performance cross-platform deployment.

Introduction

What, Who, Why

This book is not a comprehensive reference for any programming language - although you will see quite a lot of HTML, CSS, and JavaScript. This book is a comprehensive guide to web development concepts - including server side (backend) and client side (front end) development, and most things in between. We will keep our attention on the design of the web architecture, concepts that remain constant across so many of the programming languages, frameworks, and acronyms you’ve probably heard about. This book won’t play favorites - you’ll see how different architectural styles like Single Page Applications (SPA) differs from Server Side Rendering (SSR), how Representational State Transfer (REST) using JSON differs from Hypertext as the engine of application state (HATEOAS), and how conventional “roll your own” CSS can blend with full styling frameworks. This book covers the full stack.

If you are a beginner in computer science and programming, you are in for a ride - a fun one! We won’t assume you know advanced programming concepts, but we will move quickly - you will be challenged if you haven’t done much software development. One promise I can make is that you won’t walk away with shallow knowledge - we will cover concepts from the ground up, which will allow you to pick up new trends in web development as they arise - well after you are done reading this book. You won’t be taught one way of doing things, only to be left feeling lost when the next web framework becomes the new hotness of the world.

For seasoned developers new to web development, you might be surprised to learn web development doesn’t have to be the fad-obsessed, inefficient "Wild West" it can sometimes appear to be. The essentials of web development can be grounded in solid software engineering, and can be simple - if not always easy.

This book is written for university students and professionals alike. If you’ve already done some work in web development, you will likely still learn a lot from seeing things presented from a foundational perspective. Once you’ve mastered the concepts presented here, you will be better able to make use of new development trends, and make better connections between the acronym soup you encounter as you dive deeper into the discipline.

Languages and Organization

The web is programming language agnostic. The web runs on open protocols - mostly plain text being transmitted back and forth between web browsers (and other clients) and web servers. Clients and servers can programmatically generate their requests and responses using any language they want - as long as the text they are producing conforms to web standards.

You might be surprised, or even a little confused by this - especially if you've only just started studying Computer Science and the web. You've heard of HTML, CSS, JavaScript, and probably also heard people talking about Java, C#/ASP.NET, Python, Go, Rust, and a whole slew of other languages when they talk about web development. It can be absolutely befuddling... where do you start? If there isn't just one language, then which should you learn?

The other hard part about getting started with web development is that it's really hard to draw boundaries around it. Does web development include working with a database? Does it include UI design? How about distributed computing? What about queues? The answer is... yes - it probably includes everything! The reality is that a web application is a system - and depending on what it does, it could contain functionality associated with just about every branch of computer science. A typical web developer has to (or should be prepared to) integrate a lot of different sub-disciplines. In fact, the bulk of the complexity in many web applications have nothing to do with web development at all!.

In this book, we are going to try really hard to stick to purely web development, but not to the extent that you won't understand the integration points to things like UI design, databases, networks, etc.

I strongly believe there shouldn't be a distinction between web developer and software developer, and this book is written for reader who agree.

JavaScript, everywhere?

This book uses JavaScript as a server side language, running within the Node.js runtime environment. This choice is somewhat controversial - since there are wonderful frameworks and support for many programming languages on the backend. No question, the use of .NET ASP MVC, Python, Java, Rust, Ruby on Rails, Go and many others could be more than justified. The truth is that you can find just about any programming language being used professionally on the backend - and many applications use a mix of languages!

This book uses JavaScript as a server side language, running within the Node.js runtime environment. This choice is somewhat controversial - since there are wonderful frameworks and support for many programming languages on the backend. No question, the use of .NET ASP MVC, Python, Java, Rust, Ruby on Rails, Go and many others could be more than justified. The truth is that you can find just about any programming language being used professionally on the backend - and many applications use a mix of languages!

I have chosen JavaScript for no reason other than this: If you are new to web development, you must learn JavaScript for client-side browser-based development. Learning multiple programming languages at the same time obscures concepts - and concepts are what this book is about. In teaching web development to undergraduate university students for over a dozen years, I’ve found that using JavaScript limits the overhead in learning web topics. If you already know the JavaScript language, this book will give you a tour-de-force in web development concepts - without needing to learn a new language. If you are new to JavaScript, this book should give you enough of a primer while teaching you the backend such that by the time we cover client side programming, you’ll be able to focus on concepts and not syntax. Once you learn the concepts of web development, you won’t have trouble moving to other languages on the backend if you prefer.

There are other arguments made for JavaScript on the backend, such as sharing code between server and front end runtimes, and the suitability of JavaScript’s I/O model for backend web development. These arguments have some validity, but they aren’t universally agreed to by any stretch. We use JavaScript here for no other reason but to flatten the learning curve.

On the front end, there are of course other paradigms beyond JavaScript. There is no question that JavaScript has some rough edges, and until very recently lacked many language features that support solid application development. Still at the time of this writing (and well beyond I imagine!), JavaScript is not a strongly typed or compiled language - and those attributes alone rub some the wrong way. TypeScript is a widely popular derivation of JavaScript, adding many features such as strong typing and better tooling to JavaScript. Like many of it's descendent (or inspirations), such as CoffeeScript, TypeScript compiles to plain old JavaScript, so it can be effectively used to write both backend and front end applications.

WebAssembly continues to grow in popularity and promise, allowing developers to run many different languages within the browser. At the time of writing, WebAssembly supports executing C/C++, Rust, Java, Go, and several other performant languages directly within the browser - bringing near native performance to front end code. The caveat, for the time being, is that WebAssembly code executes this code in a sandboxed environment that does not have access to the browser's document object model (DOM) - meaning interacting seamlessly with the rendered HTML is not yet achievable.

This book will only touch on the above alternatives for front end development, sticking with plain old JavaScript instead. Once again, this decision is rooted in the learning curve. The aim of the book is to teach you how web development works, and whether you are writing JavaScript, TypeScript, or WASM-enabled C++/Java/Rust/etc - front end development is still front end development - so we are going to stick with the most straightforward choice - JavaScript here.

Organization

This book teaches web development almost in the order in which things developed - first focusing on networks, hypertext, markup and server side rendering. You will be introduced to JavaScript early on when, just before we begin processing input from users. We will build our own frameworks around HTML templating, databases, routing, and other common backend tasks - only to have our homegrown implementations replaced with Express. The Express framework was chosen for its relative stability and ubiquity, among the many frameworks in use within the Node.js ecosystem.

Only after we have a full web application up and running do we begin to turn our attention towards styling and interactivity. CSS is introduced approximately midway through the text book, and client side JavaScript makes up the majority of the final half dozen chapters. This book will show you the differences between traditional web applications, single page applications, and cover hybrid approaches that adhere to Hypertext as the engine of application state (HATEOAS) philosophy, while still providing interactive (and incrementally/partially rendered) user interfaces. Along the way, we will cover things like local storage, PWAs, web sockets, and reactivity.

The Appendices and Perspectives sections at the end of the text are optional components aimed towards filling in some of the details different readers may be wondering about. The goal of the entire textbook, in fact, is to do this that - fill in the gaps - by providing a comprehensive overview of web development.

The Field of Web Development

Web applications are just software applications, with networking.

Maybe more specifically, they are software applications with networking separating the user interface (the part people see and click on) and the business logic. No matter what languages you use, the general design of the frameworks you will find are pretty much the same. The industry is very cyclical, and very susceptible to buzzwords and trends. For example, I've witnessed several iterations away and back to server-side rendering. I've witnessed front end development changing to require it's own application and build structure, separate from the rest of the application; and I've witnessed a revolt against this taking hold - perhaps returning us to simpler architectures.

For a long time, web development was thought of as a lesser sub-field of computer science. Real programmers built "big" programs that had their own UI's and were written in C++ and Java. Toy web sites had some JavaScript, and were written in "broken" scripting languages like Perl and php. Real programmers couldn't be bothered with creating applications for the web, and even if they wanted to, web browsers were such a mess that it was too expensive and error prone to pull off. Times have changed, and few think of web development as lesser anymore. It's been a fascinating ride.

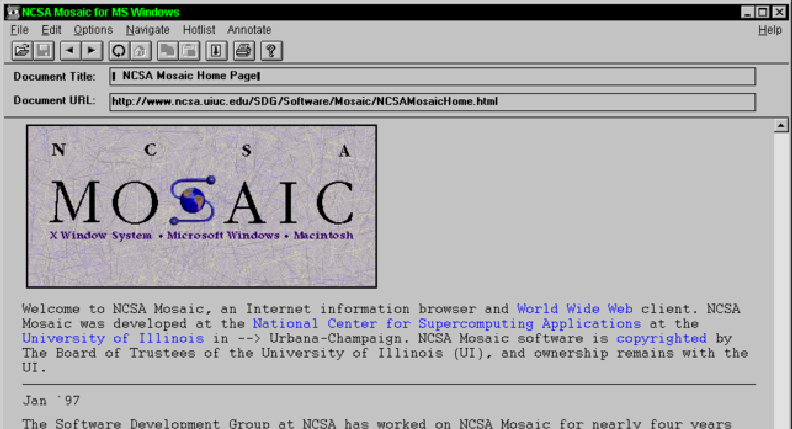

The change started to take hold in the early 2000's. While it took a long time, the dominance of Internet Explorer waned, and the competition among browsers fostered improving web standards. Better standards meant web developers had a better chance to make their app work well on everyone's machines. Browsers like Chrome also got way faster, and way more powerful - making it worth everyone's time to start looking at what they could do with JavaScript. Suddenly, real application were starting to be delivered on web technology - driving more development focus into those same technologies. HTML got better. CSS got a lot better. JavaScript grew up.

Along the same time as all these nice things were happening on the front end, back end (server-side) development was changing too. The first web application were written in a way most wouldn't recognize - actually instantiating new processes and running entire programs to respond to each request. These programs could be written in any language, and a web server would handle the networking and invoke the appropriate (compiled) program to create the network response. Languages like php and ASP, and later Java extended this model, allowing server side applications to be written as one process in it's own containers. These containers handled a lot of the web-specific plumbing, like making parsing / writing HTTP much easier. They all focused on different ways of allowing developers to generate HTML responses programmatically, and they all took somewhat different approaches. There was little separation of concerns - the business logic, HTTP processing, HTML generation, and other aspects of the programs were highly integrated. Applications written in different frameworks looked completely different from each other, even if they largely did the same thing.

Ruby on Rails - or just "Rails" - was released in 2004, and things changed. Rails took a number of huge leaps in how server side frameworks worked. Rails pioneered and/or refined rapid application development on the server, using command line interfaces to build out routes, controllers, and views. Web applications began to be more modular, and composable. It worked with view template engines to separate view generation from business logic. It didn't invent the MVC pattern, but it was really the first web framework to truly deliver on the MVC promise. We'll talk a lot more about it later in this book.

By the late 00's, and throughout the 2010's, both of the above trends just strengthened. Web standards and browser performance led to more developers doing more things client side, in JavaScript. As this happened, developers wanted better tooling, better dependency management, better UI frameworks - so they built them. Server side, developers loved how Rails was designed, but they wanted to use their favorite programming language - not just Ruby. Server-side frameworks heavily influenced by Rails emerged - Django (Python), Laravel (PHP), Grails (Groovy/Java), Express (Node.JS), and many more. Even .NET was on board - releasing ASP.NET MVC - very much in line with the Rails design.

Modern web development has benefited by a virtuous circle - as tools and languages and standards improved, the amount being done on the web grew, which demanded even better tools, languages, and standards. The explosion of different devices accessible to people also created huge demand for standards. Today, nearly every software application we interact with - whether it's through a traditional web browser or through an app on our phone - is a web application. In many respect, today, web development is software development.

The landscape

We are eventually going to focus on a slice of web technologies (our "stack"), but it's important to have an understanding of how things fit together. We've been throwing around some terms that need explanation:

Front end

Front end development refers to all the code used to display the user interface to a user - wherever that user interface might live. In most cases (in the context of this book), this is the web browser. The web browser must draw the user interface (the graphics, the user interface elements, etc.) using code delivered to it from the web server. The code delivered tells it the structure of the web page, the styles of the page, and the interactivity of the user interface.

On the front end, we generally have three languages for these three aspects:

- Structure: HyperText Markup Language (HTML)

- Style: Cascading Style Sheets (CSS)

- Interactivity: JavaScript

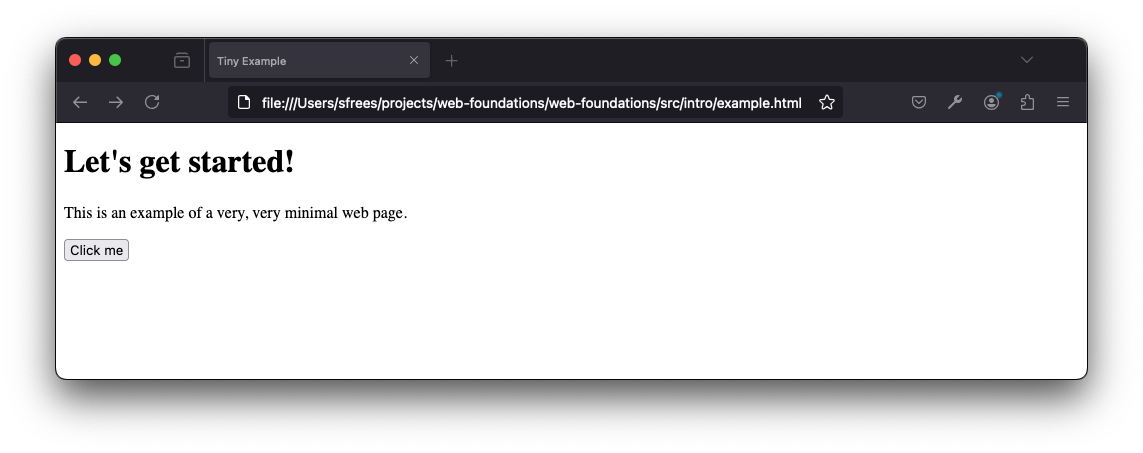

Let's look at a tiny example, a simple web page that has a bit of text, and a button.

<!DOCTYPE html>

<html>

<head>

<title>Tiny Example</title>

</head>

<body>

<h1>Let's get started!</h1>

<p>This is an example of a <span>very, very</span> minimal web page.<p>

<p>

<button type='button'>Click me</button>

</p>

</body>

</html>

Without getting stuck on any details, understand that the above is HTML code. It is defining a page with a heading, some text, and a button. It's the structure of the page. We'll spend lots of time talking about HTML later.

How does this get displayed to a user? The answer is important, and be careful to understand it. The HTML, as text, must be loaded into a web browser, somehow. If you take the text, and you save it in a file called example.html on your computer, you can load it in your web browser by simply double clicking on it. It will look something like this:

Notice what is shown in the URL address bar.

file:///Users/sfrees/projects/web-foundations/web-foundations/src/intro/example.html

The browser has loaded an HTML file directly from the file system and displayed it. To display it, it parsed the HTML into it's own internal representation and invoked it's own drawing/graphics commands to render the page according to HTML specifications.

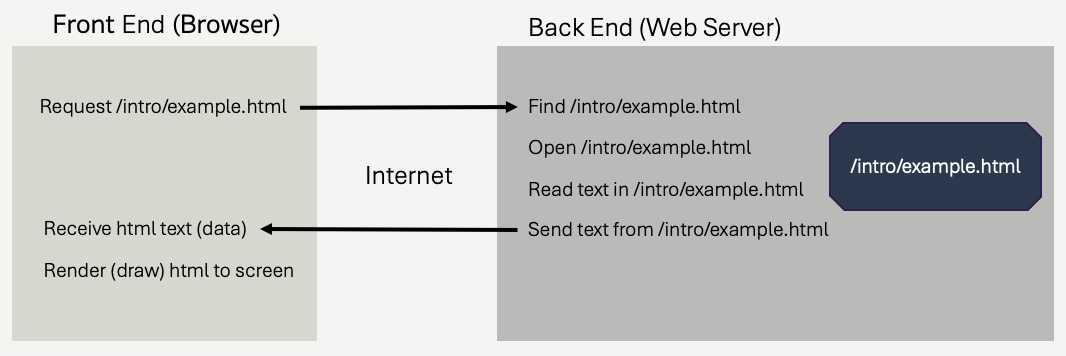

While that's OK, you must understand that this is not the way the web works. HTML files that appear in your web browser are not stored on your own computer in most cases. In most cases, they are stored on some other machine, on the internet!

This brings us to our first shift away from "front end", and to the back end (and the networking in between). We are going to refine our understanding of this over and over again, here we are going to keep things very high level.

Back end

Type the following into your web browser's address bar:

https://webfoundationsbook.com/wfbook/intro/example.html

The same page loads, but this time, that file didn't come from your own computer. Delete example.html from your own machine, if you don't believe me. Instead, it came from a different machine - webfoundationsbook.com. When you typed the address into your web browser, the web browser connected to webfoundationsbook.com, it sent a specially crafted message (as you'll see soon, crafted with HTTP) asking webfoundationsbook.com to send the text contained in a file found at /intro/example.html on webfoundationsbook.com's hard drive. That text was then parsed and rendered by the browser, just the same.

In order for that to all work, that means some program must be running on the webfoundationsbook.com computer. That program is accepting connections and requests from other machines. It's decoding the requests, finding the file requested, opening the file, and sending the contents of the file back to the connected browser! That program is a web server.

Some of the most common web servers for doing this (and much more) are apache or nginx. We will see more of those later on in this book.

The browser's pseudocode, vastly simplified, might look something like this.

// Pseudocode for the web browser

// Suppose we can access the values in the UI through

// through a built-in browser object

response = send_http_request(browser.address_bar.value);

// The response object might have a body attribute, containing

// the HTML text that was returned by the server.

render(response.body);

Here's some pseudocode (missing vital error handling!) further illustrating what is happening on the server.

// Pseudocode for a web server

// Suppose we have function to read a request off

// the network, from a browser

request = recv_http_request();

// Suppose the request object returned has a path

// property, corresponding to /intro/example.html

// when the browser requests https://webfoundationsbook.com/intro/example.html

file = open_file(request.path);

// Read the html from the file, as plain text (character buffer)

html_text = file.readAll();

// Use a function to send the data back to the browser

send_http_response(html_text);

So, already, we see there are typically two programs involved - (1) a web browser and (2) a web server. The web browser asks for and receives front end code from the server - in this case html. The web server is responsible for generating that text - in this case, simply by reading the example.html file from it's own file system. Once the web browser receives the HTML code, it uses it to draw the page to the screen.

If you are wondering, web browsers and web servers can be written in literally any programming language. Most web browsers are written in C/C++, and some have at least some components written in other languages like Rust. Web servers, especially the top level ones (we'll explain what that means later) are also often written in C/C++. It's important to remember, they are just ordinary programs, they read files, they make network connections (sockets), they parse and generate specially formatted text, they draw things (browsers, not servers).

Return to the front end - Styling

So we've established that HTML code is delivered to a web browser, usually from a web server. That HTML code defines the structure of the page. Web browsers use standard conventions to draw HTML to the screen in expected ways. Looking at the HTML we were using, notice the text that is wrapped in the <h1> and <button> elements. They look different than the other bits of text wrapped in <p> and <span>. h1 is a heading, button is pretty obviously telling the browser to draw a button. p is a paragraph and span is a text span within a paragraph that can be styled differently (but isn't yet). This is the structure of the page - it's contents.

Front end code also is used to define style and interactivity. Let's add just a bit of style, by making the heading's text underlined, and the span's text blue. We do this by adding Cascading Style Sheet (CSS) rules. CSS is a language unto itself, and we will study it in several future chapters - but for now, we will just embed it right into our HTML code.

<!DOCTYPE html>

<html>

<head>

<title>Tiny Example</title>

<style>

h1 {

text-decoration:underline;

}

span {

color:blue;

}

</style>

</head>

<body>

<h1>Let's get started!</h1>

<p>This is an example of a <span>very, very</span> minimal web page.<p>

<p>

<button type='button'>Click me</button>

</p>

</body>

</html>

All the magic is happening within the style element - we've used CSS syntax to tell the browser to style h1 elements and span elements a bit differently. Go ahead and load the following in your web browser, no surprises - just some styling.

https://webfoundationsbook.com/intro/example-style.html

CSS can be used to define all aspects of the visual styling and layout of HTML content. It's an immensly powerful language, that has undergone incredible cycles of improvements over the decades since it's introduction. While there were some early competitors, no other language is used to style HTML these days - CSS is the language. All browsers support CSS (at least, mostly).

Since visual styling is so important, it shouldn't be surprising that CSS styling code can grow - it can become a huge part of the front end development efforts. If you have any experience in computer science and software engineering, you know that we like to reuse code. CSS is no different - reusing and modularizing CSS is important when creating maintainable web applications. Moreover, not all of us are artists - we aren't all trained in good UI practices. It shouldn't be surprising that there are libraries and frameworks that contain vast quantities of CSS code designed by people who are really good at designing visual systems, and that these libraries and frameworks are often freely available.

Here are a few example of CSS libraries and frameworks that are commonly used. The list isn't exhaustive, but hopefully it gives you an idea of how they fit into the web application landscape if you've heard about them. They are just CSS, they are added into your HTML to provide the web browser styling instructions.

- Bootstrap - likely the most widely used framework, this has been around for a long time. Provides full styling of everything from text, navigation toolbars, dialogs, and more. We will spend some time looking at this in more detail in later chapters.

- Foundation - similar in aims to bootstrap, Foundation provides full styling of most user interface components.

- Tailwinds - takes a different approach compared to Bootstrap and Foundation, in that it focuses on composable CSS styles rather than full user interface components. This gives designers more control, but can also be harder to get started with.

- Simple.css - lightweight CSS framework that provides an extremely minimal set of stylings for HTML elements. Theses types of frameworks are really nice for rapid development, because they don't require you to add much to your HTML at all. Their goal is to get things looking "good" immediately, and then you can add more later.

There are also more specialized libraries defining styling. By more, I mean thousands. They are all just CSS, that get added to your front end HTML code. Here are some two interesting ones, just to show how varied they can be.

- United States Web Design System - this is the standard CSS frameworks for use on United States government web sites. Many other countries have similar frameworks. The goal is to provide extremely high quality out-of-the-box accessibility.

- NES.css - all the way on the other side of the spectrum, here's a CSS library that simply styles all your HTML so the page looks like it's from the Nintendo Entertainment System from the 1980's. It's fun, but certainly not general purpose!

Front end interactivity

The page we've been looking at is static. Once it's shown on the screen, it doesn't change. The HTML and CSS are delivered to the browser, and that's that. What if we want something to happen when we click that <button> element though? This is where we can add some interactivity. Interactivity on the web generally means creating code that alters the HTML or CSS currently loaded in the web browser, causing something to change. It can mean more, but for now that's a good enough description.

Let's add some interactivity. When the user clicks the button, we are going to add some content below the button and change some of the CSS attached to the span element.

<!DOCTYPE html>

<html>

<head>

<title>Tiny Example</title>

<style>

h1 {

text-decoration:underline;

}

span {

color:blue;

}

</style>

</head>

<body>

<h1>Let's get started!</h1>

<p>This is an example of a <span>very, very</span> minimal web page.<p>

<p>

<button type='button'>Click me</button>

</p>

<script>

// Change the "very, very" to red, and add a new text snippet

// with a random number, and remove the button so it can't be clicked

// again!

document.querySelector('button').addEventListener('click', () => {

document.querySelector('span').style.color = 'red';

const n = Math.ceil(Math.random() * 10);

const p = `<p>Random number generated client side: ${n}`;

document.querySelector('p').innerHTML += p;

document.querySelector('button').remove();

});

</script>

</body>

</html>

Go ahead and check it out. When you click the button, something really important is happening - the JavaScript inside the script element is changing the HTML itself, using what is called the Document Object Model (DOM). The span is given a new CSS value for color. A new p element is created and appended to the last p element in the document, with a random number within the text (it's different every time you load the page). The button is removed entirely. Notice, the browser changes what is rendered as the JavaScript changes the DOM elements. The DOM elements are what the browser renders - they are the internal representation of the HTML loaded by the browser.

It's important to understand that the JavaScript code that modified the HTML DOM is running inside the web browser. The web browser, in addition to a renderer, a JavaScript runtime environment! The server is not involved in anything that we just did, it has no idea anyone has click a button, or that any HTML has been modified. It all happened within the browser's internal representation of the HTML the server sent to it.

Interactivity on the front end, using JavaScript could (and most definitely is) be the subject of entire books, entire courses, and entire careers. As you might imagine, there are a huge number of frameworks that help developers write JavaScript to add an enormous amount of interactivity to HTML. You've no doubt heard of some.

- jQuery - probably the first and most broadly used JavaScript framework, in many ways it revolutionized how we wrote JavaScript. jQuery was created in 2006, when JavaScript suffered from a very poorly standardized DOM API, meaning writing JavaScript to interact with the HTML DOM (change things on the page) needed to be written differently depending on the browser. THis was also a time when Internet Explorer was still quite popular, but Chrome, Safari, and Firefox were too large to be ignored. jQuery created a very powerful API that smoothed over the differences. It inspired iterations to JavaScript itself, which later became part of the standard web APIs across all browsers. jQuery isn't often used these days, because JavaScript has evolved enough that it's no longer necessary - but it's impact is still felt.

- React - released in 2013, React became the most popular reactive framework/library very quickly, and has remained so through the time of this writing. React focuses on component design, and has offshoots like Reach Native which aid in mobile application development. The concept of reactivity centers around how developers map application state (usually state is represented by JavaScript objects) to HTML DOM changes. Reactive frameworks allow the developer to modify state variables, and those changes are automatically applied to the DOM based on declarative rules. This is very different than the procedural approach in our JS example above, where we directly modify the DOM. There are many reactive frameworks, the concept is extremely powerful.

- Vue - released in 2014, Vue is similar to React in terms of it's model of development. A proper Vue app manages front end application state, and automatically modifies the DOM based on those state changes. It has what many people feel is a shallower learning curve than React, and we will use it when we dive deeper into reactive frameworks and single page application design later in this book.

- Angular - AngularJS was initially released in 2010, and rewritten (and renamed to Angular) in 2016. Angular shares a lot of design principles with React and Vue, along with other predecessors like Ember and Knockout.

There are lots and lots of other front end JavaScript libraries and frameworks. Some are large, some are very small. While we won't dive too deeply into them, we will learn the fundamentals of JavaScript on the client (front end) in depth, and you'll be able to pick many of these frameworks up pretty quickly once you've mastered the basics.

Back to the Back end

We could have a web site just with HTML, CSS, and JavaScript. You could have lots of HTML pages, link them together, and use CSS and JavaScript to do a lot interesting things.

We could write our own HTML, CSS, and JavaScript in a text editor, and use a SFTP program to transfer those files to a simple web server that can map network requests from clients to these files. Those files are then transmitted to the browser for rendering. This is in fact still very viable, it's probably still how most web pages are delivered.

However, there is something missing. Our pages are still static in that they are always exactly the same, whenever they are loaded into the browser. Sure, our front end JavaScript might change the DOM later, but it's always exactly the same HTML, CSS, and JavaScript being delivered to the browser, because we are just serving up files.

As a little thought experiment, what if we rewrote the server pseudocode from above so we didn't use a file at all?

// Pseudocode for a web server, without a file.

// Suppose we have function to read a request off

// the network, from a browser

request = recv_http_request();

if (request.path == '/intro/example.html') {

html_text = "<!DOCTYPE html><html><head><title>Tiny Example</title></head>";

html_text += "<body><h1>Let's get started!</h1><p>This is an example of a ";

html_text += "<span>very, very</span> minimal web page.<p><p>";

html_text += "<button type='button'>Click me</button></p></body></html>";

send_http_response(html_text);

}

else {

// send some sort of error, we don't have anything for this path...

}

If you look closely, the web server is sending exactly the same text to the web browser when the browser requests /intro/example.html as it was before. The difference is that instead of getting the HTML text from a file saved on disk, the web server is just generating the HTML using string concatenation. It's ugly, but it works - and in fact, the browser cannot tell the difference.

Why would we do this? The answer is simple, and profoundly important. Now, since we are generating the HTML inside a program, we have the freedom to create different HTML whenever we want. We can fetch data from a database, and include that data in the HTML. We can perform any number of computations, interact with any number of data stores and systems, and use any other mechanism to customize the HTML delivered to the browser. We now have the ability to create a fully customized HTML response to /intro/example.html if we please.

To drive this point home a little more, let's generate a random number and put it in the HTML sent to the browser.

// Pseudocode for a web server, without a file.

// Suppose we have function to read a request off

// the network, from a browser

request = recv_http_request();

if (request.path == '/intro/example.html') {

html_text = "<!DOCTYPE html><html><head><title>Tiny Example</title></head>";

html_text += "<body><h1>Let's get started!</h1><p>This is an example of a ";

html_text += "<span>very, very</span> minimal web page.<p><p>";

html_text += "<button type='button'>Click me</button></p></body></html>";

send_http_response(html_text);

}

else if (request.path == '/intro/example-style-js.html') {

number = Math.ceil(Math.random() * 100);

// The beginning is just static text content

html_text = "<!DOCTYPE html>";

html_text = "<html>";

html_text = " <head>";

html_text = " <title>Tiny Example</title>";

html_text = " <style>";

html_text = " h1 {";

html_text = " text-decoration:underline;";

html_text = " }";

html_text = " span {";

html_text = " color:blue;";

html_text = " }";

html_text = " </style>";

html_text = " </head>";

html_text = " <body>";

html_text = " <h1>Let's get started!</h1>";

html_text = " <p>This is an example of a <span>very, very</span> minimal web page.<p>";

// Here's the dynamic bit, with the server generated number in the text.

html_text = " <p>The server generated number is: " + number + " </p>"

// The rest is static again.

html_text = " <p>";

html_text = " <button type='button'>Click me</button>";

html_text = " </p>";

html_text = " <script>";

html_text = " document.querySelector('button').addEventListener('click', () => {";

html_text = " document.querySelector('span').style.color = 'red';";

html_text = " const n = Math.ceil(Math.random() * 10);";

html_text = " const p = `<p>Random number generated client side: ${n}`;";

html_text = " document.querySelector('p').innerHTML += p;";

html_text = " document.querySelector('button').remove();";

html_text = " });";

html_text = " </script>";

html_text = " </body>";

html_text = "</html>";

}

else {

// send some sort of error, we don't have anything for this path...

}

Right about now, you may be getting a sick feeling in your stomach. We are writing code, inside code. Worse yet, we are writing code (a mix of HTML, CSS, and JavaScript) inside plain old strings, and using concatenation to build it all up. This is a tiny example. If you feel like this won't scale well up to real web applications, you are 100% correct!

Now we've arrived at the land of back end frameworks. Server side, backend web frameworks handle the following types of things (and many more):

-

HTTP parsing / formation - we side stepped this by imagining we had functions like

recv_http_requestandsend_http_response. In reality, these types of functions will be part of a web server framework/library, and will be doing a ton of work for us. -

Path routing - we have the beginning of routing in our last example, where we use

ifandelse ifstatements to determine which response to generate based on the requested path. Routing is a major part of web development - the server needs to respond to many many different paths (urls). Web frameworks will provide methods of organizing your code into functions, objects, and modules that map to specific paths/urls, and the framework will ensure the right handlers are called at the right time. -

View transformations - we aren't going to generate HTML with strings. We are going to build objects of data programmatically (models), and then use templating engines to transform the data into HTML (views)using a template language. It's a mouthful, but when we get there, you will see how much easier it makes things! There are tons of templating languages, and most do pretty much the same thing. If you've heard about ejs, Jinja, pug, HAML, Liquid, Mustache, or Handlebars... they are all templating languages with large following in the web development community. We'll talk about pug in more detail later. Once you learn one, the others are very easy to pick up.

Full featured web frameworks tend to cover #1 and #2, and typically will let you choose which templating language (#3) to use. Modern frameworks are available in just about every programming language you can think of. Most modern frameworks support the Model-View-Controller (MVC) Architecture - which we discussed a bit above. MVC is a way of organizing the application in a way that separates model (the data), the view (HTML generation), and the business logic (also called controller).

It's hard to say if one is better than the other - there tends to be a few good choices for each programming language. Which programming language you choose is probably more of a decision based on you and your teams skills, and preferences - rather than anything specific to the web.

Here's a sampling of some popular backend web frameworks. Each of these covers all of the above, and often includes more. Note that your choice of a backend framework has nothing to do with anything we've discussed about the front end. They are completely separate!

- Python

- Java (and JVM languages)

- Rust

- Ruby

- PHP

- C++

- .NET

- JavaScript (Node.js)

We'll discuss frameworks in depth later in the book.

Pro Tip💡 You don't want to describe yourself as "a Django developer" or "Laravel developer". You want to learn backend web development and be comfortable in any language or framework. You want to call yourself a web backend developer - or better yet - web developer. Specialization is marketable, and valuable, but you never want to pigeonhole yourself into one framework - it advertises a lack of breadth.

In-between and outside

We've glossed over the in between part, the technology that connects the front end and back end. That's networking, and that is HTTP. We will cover that extensively in the next few chapters!

Outside the typical discussion of front end and back end development are all the systems components and concerns that tend to make up web applications of any complexity. This includes security, understanding TLS/HTTPS, hashing, authentication, CORS, and more. This includes databases of all kinds - relational, document stores, and more. We'll also need to learn about hosting, content delivery, and deployment. It's a lot of ground to cover, and there are chapters dedicated to these topics later in the book.

Breadth & Depth

The goal of this book is to give you enough breadth to understand how all of the pieces of web development fit together. You'll understand the fundamentals in a way that allows you to pick up new frameworks quickly. You will understand the entirety of full stack web development.

The second goal of this book is to give you depth along a particular set of frameworks/libraries so you can build a full scale web app from the ground up. You will understand how front end and backend frameworks work at a low level, and then see how we apply layer after layer until we reach modern framework functionality. We'll choose specific frameworks at each step - for bot the front end and back end - and get a lot of experience using them.

About the Author

I'm Scott Frees - the author. I've been teaching Computer Science at Ramapo College of New Jersey since 2006. I am currently a Professor of Computer Science, and the Director of the Master's in Computer Science, Applied Mathematics and Data Science degrees at Ramapo. I am also the chair of our Computer Science and Cybersecurity departments.

I completed my PhD at Lehigh University in 2006, where I developed new ways for interacting within immersive virtual environments. Virtual Reality has come a very long way since I was doing this work (that's me below).

While completing my PhD, and ever since, I have been an active consultant and freelance developer. It's a passion of mine, because what I really like about computer science is building things people actually use. I've always liked user interface design, but I've also been drawn to building systems - the backend of a software application. Freelance development has given me the opportunity to do all this, in a lot of different domains. I've created software to design oil and gas industrial equipment, track and detect aircraft using 3D cameras, and analyze genomes. I've gotten to develop computer vision-enabled security camera systems, IoT network protocols, mobile apps to manage construction sites, finance/trading platforms, and a bunch of other smaller (and just as fun) projects. Every one of those (except the aircraft detection...) has been a web application at it's fundamental core - but they've all contained a boatload of complexity outside of what is typically considered web development. This is why I think it's so important that every software developer learn web development - it broadens your impact, and it doesn't take away from developing large systems.

I've tried to combine my passion for building things with my love of teaching. In 2015 I created a blog and later an ebook that covered a professional niche topic that I had been doing a lot of work in - Node.js C++ integration. For anyone looking to get into freelance software development - my best advice is do something like this. It's called content marketing, and it's led to lots of opportunities for me. The time I put into writing the book has been repaid many times over from the projects that grew out of help I was providing readers.

I created the Web Development course at Ramapo College in 2007. My experience at that point was mainly in the Java world. The Java web development world in the early 2000's wasn't a wonderful place - web development was hard, and it was without a lot of best practices. The course evolved with me, over almost two decades. The course adopted jQuery for a while, and dabbled with ASP.NET (mainly because we had a C# course that our students already took, making .NET attractive). By 2014, the course had shifted to Node.js as the backend language with Express as the framework of choice. The course design was innovative at the time, fully leveraging the Node.js ecosystem and the MEAN stack to teach undergraduates. Since then, I've stopped covering the M in the MEAN stack (MongoDB), as the industry (and me) have circled back to the tried and true relational database for most use cases. The A in MEAN has also gone away - I don't teach Angular anymore (or jQuery). Modern front end JavaScript is vastly superior to what it was when I started, and most of what we cover in class is now just straight up JavaScript - although I still cover modern reactive frameworks to some extend (i.e. Vue).

If you are a software developer who likes to have people use your work, then you should consider becoming a web developer ;) Targeting the web lets you deliver your work to everyone, directly. It's an amazing experience to have people really use your work - and my hope is that this book can be a helpful tool for you along the way to reaching that goal.

Some more of my work...

Professor of Computer Science, Chair of Computer Science and Cybersecurity, Director of MS in Computer Science, Data Science, Applied Mathematics.

My consultancy - Turning ideas into working solutions and real product: building MVPs, modernizing legacy systems and integrating AI and automation into your workflows.

My work on Node.js and C++ Integration, a blog and e-book. Native addons, asynchronous processing, high performance cross-platform deployment.

Networks

As a web developer, you typically work far above the level of Internet Protocol (IP), Transmission Control Protocol (TCP), sockets and the other underpinnings of computer networks and the internet. Typically is not the same as always, however. Moreover, having a solid understanding of how the web technologies have been built on the back of core technologies like IP/TCP gives you a huge advantage when keeping up with the ever changing field you are entering.

This chapter provides you the fundamental knowledge and skills needed, and also the perspective to not only understand the modern web and it’s tooling, but also appreciate it. Having a solid understanding of networking concepts will also come to your rescue when learning about deploying your web applications along with other devop type activities.

Network Protocols

When we say the web, it's fair to think about web browsers, web sites, urls, etc. Of course, the term "the web" is commonly used interchangeably with the internet. Truly, the internet is a lot more broad than you might realize though. The internet is a global network of computers. It facilitates your web browser accessing a web site. It facilitates email delivery. It lets your Ring security camera notify your phone when the Amazon delivery arrives. When we talk about the internet we are talking about the entire internet - which encompasses billions (if not trillions!) of devices talking to each other.

The first thing we need to understand about computer networks is the concept of a protocol. A network is just a collection of devices, sending electrical signals to each other over some medium. In order for this to be useful, we need some things:

- We need to know how to find devices to talk to

- We need to know how to translate electrical signals into useful information

There's a whole bunch of things that flow from those two requirements. It might help to first consider some real world protocols. The postal system comes to mind.

When we want to mail a physical thing to someone, what do we do? First, we need to know their address. We need to know that (at least in the United States) that addresses look something like this:

98 Hall Dr.

Appleton, WI 54911

There are rules here. On the second line, we expect the town or city. The abbreviation after the comma needs to correspond to an actual state in the US. The number after the state is a zip code, or postal code. This indicates not only a geographic area, but also a specific post office (or set of post offices) that can handle the mail going to addresses within that postal code.

Here we have the beginnings of point #1 above. There is an expectation of how an address is defined and interpreted. It's an agreement. If you think more carefully, there are more - such as where you write this address on an envelope, etc. All of the things associated with filling out an address on an envelope is part of the mail system's protocol.

We also know that our mail can enter the mail network through various places - our own mailbox, or a public postal box. From that point, there is a vast infrastructure which routes our physical mail to the appropriate destination - taking many hops along the way, through regional distribution centers, via airplane, train, truck, to the local postal office, and then to the physical address of the recipient. We intuitively know that this requires a lot of coordination - meaning all of the various touch points need to know the rules. They need to know where to route the mail!

With #1 out of the way, how does the mail system handle #2 - exchanging meaningful information? Interestingly enough, the postal system actually does very little to facilitate this. Just about the only thing it ensures (or at least attempts to) is that when you mail something to someone, they will receive the whole thing, in reasonable condition. If I mail a letter to you, the postal system's promise to me is that the entire letter will arrive, and it will still be readable.

So, how do we effectively communicate via the postal system? Well, the postal system is one protocol - for mail transport and delivery - but there is also another protocol at work. When you send a letter to someone in the mail, you implicitly make a few assumptions. Most importantly, you assume the recipient speaks (or reads) the same language as you, or at least the same language the letter was written in. There are also other commonly accepted conventions - like letters normally have a subject, a date, a signature. There are actually many assumptions built into our communication - all of which we can consider the "letter writing protocol".

Notice now that we have identified two protocols. One protocol, the postal protocol, establishes a set of rules and expectations for transport and delivery of letters. The second protocol, the letter protocol establishes a set of rules and expectations for understanding the contents of such letters.

Computer Protocols

What does this all have to do with computer networks? Computers need to communicate under a set of assumptions. All data in a computer systems is represented by 1's and 0's (see big vs little endian if you think this is straightforward). In order for computers to communicate, we'll need answers to the following:

- How are 1's and 0's encoded/decoded across the medium of transmission (copper wires, radio signals, fiber optics)?

- How is the encoded data's recipient to be represented?

- How can the data be routed to the receiver if not directly connected to the sender?

- How do we ensure the data arrives in reasonable condition (not corrupted)?

- How can the recipient interpret the data after it arrives?

Just like with our postal / letter example, all of these questions aren't going to be addressed by an single protocol. In fact, computer network protocols formally defines several layers of protocols to handle these sort of questions. The model is called the Open Systems Interconnnection - OSI model.

In the OSI model, the question of how 1's and 0's are encoded/decoded is considered part of the Physical and to some extent the Data link layers. These are the first two layers.

Layer 3 - the Network layer provides addressing, routing, and traffic control (think of that as an agreement on how to handle situations where the network is overloaded). This really covers question #2 and #3, and will be handled by the first protocol we will look at in detail - the Internet Protocol.

Our 4th question - how we ensure data arrives in reasonable condition - is actually more interesting than it might originally appear. Looking back to our postal/letter example - what do we mean by a letter arriving in reasonable condition? Clearly, if the letter itself is unreadable (perhaps water was spilled on it, and the ink has bled), it is unusable. This happens with 1's and 0's on the internet too - the physical transmission of these electronic signals is not perfect. Think about the trillions of 1's and 0's that are traveling through the air, through wires under the ocean, etc. Those bits will get flipped sometimes! This will result in a mangled data transmission.

How do we know if some of the bits have been flipped though? If you receive a physical letter in the mail that was somehow made unreadable, it's obvious to you - because the "letters" on the page are no longer letters - they are blobs of ink. In a computer system, if a bit gets flipped from a 1 to a 0, or a 0 to a 1, the data is still valid data. It's still 1's and 0's!

To drive this point home, let's imagine I'm sending you a secret number, the number 23. I send you the following binary data, which is the number 23 written as an 8-bit binary number.

00010111

Now let's say you receive this, but only after these signals travel the globe, and one digit gets flipped somehow.

01010111

You have received the number 87. The number 87 is a perfectly reasonable number! There is no way for you to know that an error has occurred!

Thankfully, we have ways of handling this kind of data corruption - checksums, and we'll cover it in a bit. This error detection is handled by the Network Protocol layer in the OSI model, and in our case will be part of the Internet Protocol.

As we will see however, detecting an error is not the same thing as handling an error. When an error occurs, what should we do? Do we have the sender resend it? How would we notify the sender? These questions are handled by the Transport layer, and will be handled by other protocols above the Internet Protocol in our case - either by Transmission Control Protocol or in some cases User Datagram Protocol.

The last question we have is #5, how can the recipient interpret the data after it arrives?. There's a lot backed in here. As you might recall from the postal/letter example, understanding the contents of a message requires a lot of mutual agreement. Is the letter written in a language the recipient can understand? Is there context to this letter - meaning, is the letter part of a sequence of communications? Does the letter contain appropriate meta data (subject, date, etc.)?

All of these issues are handled by layers 5-7 in the OSI model - Session, Presentation, and Application layers. For web development, the Hypertext Transfer Protocol protocol outlines all the rules for these layers. For other applications, other protocols define the rules - for example, email uses SMTP (Simple Mail Transfer Protocol) and file transfer applications use FTP (File Transfer Protocol) and SFTP (Secure File Transfer Protocol). Some applications even use their own custom set of rules, although this is less common. Generally, web applications will also layer their own logic and context over these protocols as well, unique to the particular use case of the application. For a web application, things like login/logout sequences, url navigation, etc. are clearly unique to the application itself. If users visit specific pages out of order, they might be "breaking the rules".

We won't cover physical networking in this book. It's a fascinating subject - understanding how 1's and 0's are actually transmitted across the globe - through the air (3G, 4G, 5G, LTE, etc), via satellites, ocean cables, etc - is a pretty heavy topic. When you start to think about the shear volume of data, and the speed at which it moves, it's mind boggling. However, as a web developer, the movement of 1's and 0's between machines is far enough removed from you that it's really out of scope. If you are interested, start by looking at the Physical Layer and then you can start working your way to all the various technologies.

As a web developer, you will be will be dealing with at least three protocols for communication in web development:

- Internet Protocol: Addressing, Routing, Error Detection

- Transmission Control Protocol: Error handling, reliable delivery of requests/responses, multiplexing

- HyperText Transfer Protocol: Encoding/Decoding of requests and response, and all of the rules of the web!

While the HyperText Transfer Protocol is the most important, the other two are still quite relevant, so we will tackle them in order.

The Internet Protocol

The Internet (capitalized intentionally) isn't the only network. It's the biggest network (by far), and really the only network used by the public today. However, if you went back in time to the 1960's, there was no reason to believe this would be the case. There were many networks - meaning there were lots of network protocols. Most of them were networks either within the defense industry or within academia. These networks weren't compatible with each other.

There was a need to have computers on different networks talk to each other - so there became a need for a standard protocol. In 1974, the Internet Protocol was proposed by V. Cerf and R. Kahn. It was quite literally devised as a protocol for communicating between networks - internet. The protocol grew in adoption, and along with a few other innovations (TCP, which we will see soon) eventually supplanted most other networking protocols entirely. In 1983, one of the largest and most important networks - ARPANET (Advanced Research projects Agency Network) switched over to the Internet Protocol. The network of computers that communicated using the Internet Protocol grew and grew. By the 1980's, the internet (not capitalized) was how people talked about the network of computers speaking the Internet Protocol. By the early 1990's, web technologies were running on top of the internet, and the rest is history.

So, what is the Internet Protocol? First, we'll call it simply IP from now on.

The first thing to understand is that the IP protocol is implemented primarily by the operating system on your computer. The IP protocol defines the fundamental format of all data moving through the internet. Thus, data encoded as IP data goes directly from memory to the network device of a computer - and out to the internet. The operating system generally limits access to network devices, and so you may interact and use the IP protocol via the operating systems API's.

IP provides two core facilities:

- Addressing

- Message Chunking & Error Checking

If you've heard of an IP address, then you know a little about IP already! We are going to go in reverse order though, starting out with message chunking - or what are referred to as packets.

IP Packets

IP messages are chunks of data that an application wishes to send to another. These messages are of arbitrary length, they are defined by the application doing the sending. An application transferring files might send an image as an IP message. A web browser might send an HTTP request as a message.

Sending arbitrary length 1's and 0's creates a bunch of problems. First, from a device design and software design perspective, dealing with fixed length chunks of data is always more efficient. Second, depending on the devices receiving (or more importantly, forwarding) the messages, arbitrarily long message may create electronic traffic jams, network congestion. To mitigate this, IP slices all messages into fixed length packets.

An internet packet is a fixed size chunk of binary data, with consistent and well defined meta data attached to it. This metadata will contain addressing information of both sender and receiver, along with a sequence number identifying where the packet is within the original larger message.

The Internet is, at it's core, a peer to peer network. Every machine on the internet is considered an IP host, and every IP host must be capable of sending, receiving, and forwarding IP packets. While your laptop or home computer is unlikely to be doing a lot of forwarding, forwarding IP packets is a critical design feature of the internet. Your computer is connected to a web of network switches that receive packets, determine whether they can connect directly to the intended recipient or which other switch is available to help locate the recipient. Each one of these switches moves up and down a topology (see below) that makes up the internet. Each packet might be forwarded by dozens of different network switches before it reaches it's final destination - just like the letter you send in the mail get's handled by many people before arriving at it's destination.

By slicing a message into packets, the network can route packets across the network independently - meaning packets belonging to the same larger message can take different paths through the network. This significantly aides in network congestion management and automatic load balancing, a primary function of all of the many millions of internet switches and routers making up the network. There's no analog to this in the postal/letter analogy - it's the equivalent of cutting your letter up into tiny pieces before sending :)

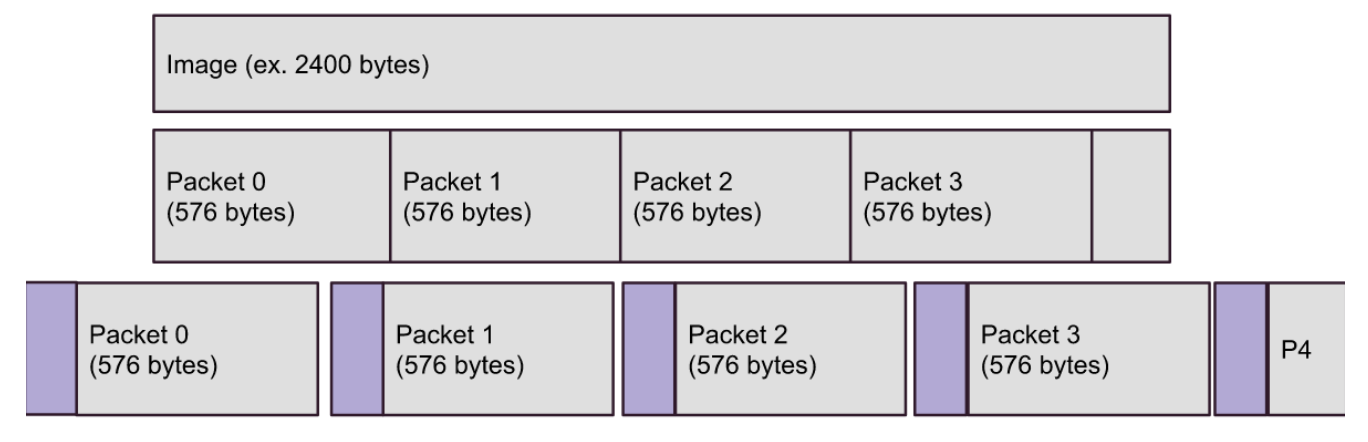

Let's look at a more concrete example. Suppose we are sending a 2.4kb image over IP. The minimum packet size that all IP hosts must be able to handle is 576 bytes. Hosts can negotiate sending larger packets, but at this point let's just assume packet sizes of 576 bytes.

Each packet will have a header attached to it, including IP version, total packet size (fixed), sender and recipient address, and routing flags such as sequence number. These packets (four of them, in the image below) are then sent across the network.

Note that in the image, packet 4 is smaller than the rest, it has the remaining bytes, less than 576. In reality, it will be sent as 576 bytes, with the remainder of the payload zeroed out.

Each packet flows through a network of switches. We will address a bit more on how these messages are routed across the network below, but for now the important concepts is that they travel through the network separately, and may take different paths. Packets belonging to the same message can arrive out of order (packet 3 may arrive at it's destination before packet 1). The IP protocol (the code implementing, at the operating system and device driver level) is responsible for re-assembling the packets in their correct order to form the resulting message on the recipients side.

Error Checking and Checksums

It's important to understand that whenever electronic data transmission occurs, we do have the possibility of errors. Computer networks send 1's and 0's over a medium, let's say radio frequency (wifi). Just like static when listening to your car's radio, transmission isn't perfect. As described above, when binary data transmission errors happen, the result is that a 1 is flipped to a 0 or a 0 is flipped to a 1. The result is still a valid binary data packet. In the best case, the resulting binary packet is nonsense, and easily understood to be corrupted. However, in most cases, the flipped bit results in a valid data packet, and it's impossible for a recipient to notice the bit flipping has occurred just by looking at the data.

For a concrete example, think about the IP message from above - and image. Images are sequences of pixels. Each pixel is three numbers, a value (typically) between 0 and 255 for red, green, and blue. For a reasonably sized image, there are thousands of pixels. Each pixel is barely perceptible to the human eye, but the composite gives us a nice crisp picture. What if one of those pixels was corrupted? One of the pixels that should look red, when it is received, is blue. How could a receiving program, which doesn't know what the image should look like, know that this has happened? The answer is, it's impossible - without some extra information.

The key to this problem is the concept of checksums. Checksums are hashes of a string of data. If you are familiar with has tables, you know the concept. For simple hash tables, you might take a large number and use the modulus operator to determine it's hash, and it's location in the table. Hashing functions exist to take arbitrarily long strings of data, and compute hash values from them that are substantially shorter.

Hashing functions are one way functions. They aren't magic, here's how it's done for all IP packets. Multiple (actually, infinite) inputs map to the same hash, however statistically speaking, the chances of two random inputs mapping to the same has is astonishingly low.

How does hashing relate to error detection? An IP packet has a payload (the actual data). This payload can be sent as input to the hashing function, resulting in a numeric value of just a few bytes. This checksum is then added to the IP packet header, and sent over the network.

When a machine receives a packet, the first thing it does is extract the payload data (a certain number of bytes) and the checksum from the packet. These are at well defined locations within the packet, so this part is quite trivial. Since all IP hosts use the same hashing function to compute checksums, the receiver can calculate the checksum of the received payload, and compare it with the checksum it found in the packet, which was computed by the sender originally.

There are 4 possible outcomes:

- One or more bits have been flipped in the area of the packet that held the checksum. This will result in the computed checksum being different than the checksum found in the packet, and the packet can be deemed corrupted.

- One of more bits have been flipped in the area of the packet that held the data payload. This will result in the computed checksum again being different than the checksum found in the packet, and the packet can be deemed corrupted. Note, there is an infinitesimally small chance that the bit flipping that occurred in the payload section resulted in a payload that still hashes to the same checksum. This would result in a false negative - the packet was corrupted by IP can't detected. Again, the chances of this actually happening are infinitesimally small.

- One ore more bits have been flipped in both the checksum and payload area of the packet. As in case #2, there is an incredibly small chance that this flipping results in the checksum changing such that the equally corrupted payload now hashes to the new checksum - however this is so unlikely we shouldn't even discuss it.

- No bit flipping occurs, the checksums match, the packet is accepted - hooray!

Recall that each IP message is sliced into many packets. If any packet within a message is corrupted, the entire message is dropped. This message drop can happen at the switch level (as it's moving through the network) or on the recipient machine. This is a hard drop - meaning that's it - the message is simply discarded. The sender is not notified. More on this to come :)

Ultimately, IP uses checksums to ensure the following: A message received by a program is the same message that was sent by the sending program.

Remember, however: IP does not ensure every message is received, and it does not ensure a sequence of messages are received in the same order they are sent.

IP Addresses

Thus far we've described what IP packets look like, to some extent. We've agreed that each packet has a header, and that the header has sender and receiver addresses. We have not defined what these addresses look like though. Let's work on that.

An IP address is made of four numbers, between 0 and 255, separated by dots (periods).

172.16.254.1

Actually, this is more specifically an IP v4 address. IP v6 addresses are more complex, and address the issue of potentially running out of IP v4 addresses (among other issues with v4). There is a lot to talk about regarding IP v4 and IP v6, but it's beyond the scope of a web development book - web developers will very rarely, if ever, deal with IP v6 addresses.

It's a 32-bit number, with each of the 4 numbers encoded as 8 bits. Every computer on the internet is assigned an IP address, however the vast majority are not assigned permanent IP addresses. When your laptop connects to a wifi switch, for example, it is assigned a temporary IP address which is unique within the sub network that wifi switch is managing. This is, in part, why we don't think we'll actually run out of IP v4 as quickly as we thought. Check out Network address translation for more on this.

Many machines, in particular machines that are frequently contacted by others, do have permanent of fixed IP addresses. These machines include routers and switches that act as gateways into other subnetworks, and servers (like web servers, database servers, etc). When your laptop or phone connects to your wireless service or wifi router, one of the first things it's doing is establishing/negotiating what machines it will use as the first hop for any outbound network messages. These first hop machines are often called gateways. Gateway machines maintain lists of other gateway machines, along with which subnetworks (subnets) they manage. Subnets are defined by ranges of IP addresses - for example, a particular subnet might be 172.0.0.0 through 172.255.255.255, and another machine within that subnet might manage IP addresses between 172.0.0.0 through 172.0.0.255. The idea is that routers and switches maintain registries of ranges of IP addresses they have connections with. When your computer sends a message to another computer, the message (IP packet) will be sent to your initial gateway machine, and then along any number of routers, being forwarded to eventually to the correct machine. Gateway machines actually maintain their registries through another protocol - the Border Gateway Protocol. Again, this is where we start to get outside of our scope, as a web developer, you will not often need to delve into the details of routing much further.

There are some special IP addresses that you should know about. Perhaps the most important is the loopback address - 127.0.0.1. 127.0.0.1 is always the current machine. If you send an IP packet to the loopback address, it will be received your own machine. You'll see this a lot in web development, because when you are coding things up, you are probably visiting your own machine via your browser! You will probably also use http://localhost for this too.

Some addresses are otherwise reserved - 0.0.0.0.0 is not used, 255.255.255.255 is a broadcast address, typically not used for anything related to web development. 224.0.0.0 to 239.255.255.255 are used for multicast (again, not used for most web development). There is more structure to IP addresses than we are discussing here - such as Class A, B, and C and their uses. You can actually see how the various ranges of IP addresses are allocated to top tier networks here, it's public data.

From our perspective as web developers, that's likely as far as we need to go in terms of addressing. IP addresses are numeric numbers, very similar to addresses on an postal envelope. Routers and switches are able to use IP addresses to route data through the network and to their destination.

Pro Tip💡 IP addresses are not the same as domain names. We are used to referring to machines using human readable names - https://www.google.com, https://webfoundationsbook.com, and so on. These domain names map to IP addresses, and they are transformed using publicly available and accessible databases. We'll cover this in the next chapter on HTTP and in particular, when we cover DNS.

IP Limitations

The Internet Protocol provides the baseline functionality of all internet applications, however it falls short in two specific areas.

- Error handling

- Multiplexing

First, we have the unresolved issue of error handling. IP detects corrupt messages, however it does not attempt to recover - it simple drops the messages. Since most applications communicate in sequences, dropped messages means there are gaps in communication. IP also makes no attempt to ensure messages arrive in order. Recall that each message you send is sliced into packets. Packets are small, to optimize their flow through the network. IP assembles packets back together on the recipient's end to form a coherent message, however two messages (each consisting of many packets) sent are not guaranteed to arrive in the same order. For example, if the first message was sliced into 100 packets (large message), and the second message was smaller (maybe 5 packets), it's very possible that all 5 packets within the second message arrive before each of the 100 packets from the first message. Out of order message may or may not be a problem for an application, but generally for web development it is.

The second problem is a bit more subtle. Imagine a scenario where you have two programs running on your computer. Each program is in communication with a remote machine (it doesn't matter if they are both talking to the same machine, or two different machines). What happens when an IP message is received?

Remember, the operating system is in charge of reading the IP message from the network device, and forwarding the message to the program that wants to read it. Which program wants to read the message?

IP actually doesn't define this, there is nothing within the IP message header that identifies the program on the specific machine that is waiting for the message. The operating system is not in the business of deciphering the contents of the message, and even if it was, it's difficult to imaging a fool-proof way for the operating system to already accurately figure out which program should receive the message. This example is describing multiplexing - the concept of having messages streaming into a computer and being forwarded to one of many programs currently running on the machine. It's sort of like receiving main to your house, and figuring out which one of your roommates should read it!